I did a speedrun of “100 days of code” in Replit using AI, so I figured I’d write about what I learned. I am not a true beginner, so this wasn’t starting from scratch, and my goal wasn’t so much to learn to code as to learn “how to use AI to code” and rely on manual coding as little as possible. It was an interesting experience and changed how I think about using these tools.

Some reflections:

Importance of PRDs / requirements

“Coding with AI” mostly means typing up clear requirements and then using AI to write the code. Being able to articulate the desired output and all of the specific details in precise language is the key. This really reminded me of PRDs (Product Requirement Docs) but much lighter weight.

Tactically what this means is that when I use AI I will create a new text doc that I can use as a PRD. In this doc, I write a long list of my requirements and edit it to be clear for the AI. I can then take this text and generate the code. I then check the output, edit it and repeat.

I think of this as dream → specify requirements → <use ai> → edit output & repeat

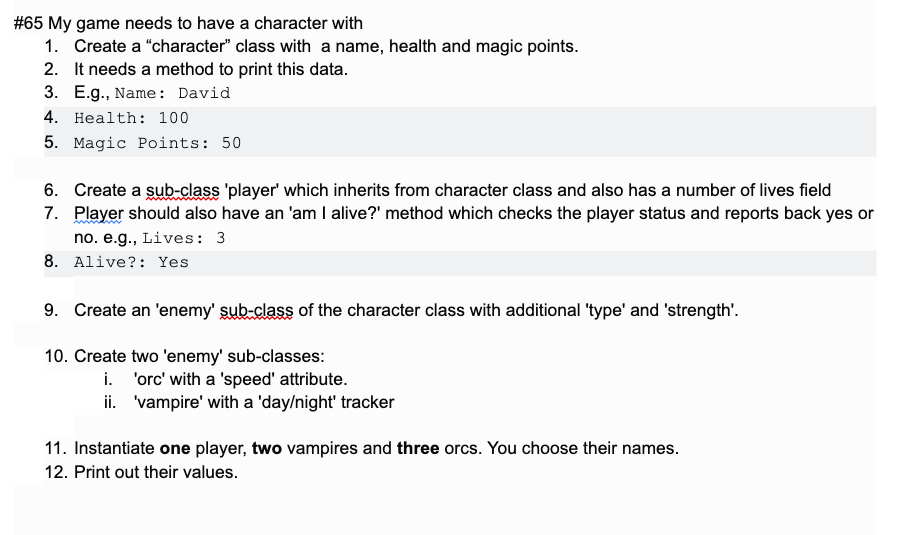

Here’s an example mini-PRD for a simple program for day 65

Beware AI complexity - i.e., if it’s beyond you, it’s going to be hard to debug

If you’re using stuff you don’t understand, then dumb it down first - go slower, piece by piece, and decompose it (the more modular everything is, the easier it is to debug using AI).

As code gets more complex, moving in steps becomes more important - you have to think about the structure and then move in sections; you also need to tell it what database to use, or how to format dates, etc. Being very literal is important. One approach I liked was adding comments where the subroutines or classes should go and then having it complete the code from there.
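To make the comment-first approach concrete, here is a minimal sketch in Python. Everything in it is made up for illustration: the diary-style program, the function names (`open_db`, `format_day`, `add_entry`, `get_entry`) and the table layout are assumptions, not code from the actual challenge. The comments are the part you would write yourself; the bodies are the kind of completion you might get back and then review. Note how the comments pin down the database (SQLite) and the date format (ISO 8601) instead of leaving those choices to the AI.

```python
import sqlite3
from datetime import date

# Hypothetical skeleton: each SUBROUTINE comment is the spec handed to the AI,
# and the function body under it is the completion you would then review.

# SUBROUTINE: open_db(path)
# - Use SQLite (the stdlib sqlite3 module), not any other database.
# - Create table entries(day TEXT PRIMARY KEY, body TEXT) if it is missing.
def open_db(path=":memory:"):
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS entries (day TEXT PRIMARY KEY, body TEXT)"
    )
    return conn

# SUBROUTINE: format_day(d)
# - Dates are always stored as ISO 8601 strings, e.g. 2024-01-31.
def format_day(d):
    return d.isoformat()

# SUBROUTINE: add_entry(conn, d, body)
# - Insert the entry for that day, overwriting any existing one.
def add_entry(conn, d, body):
    conn.execute(
        "INSERT OR REPLACE INTO entries (day, body) VALUES (?, ?)",
        (format_day(d), body),
    )
    conn.commit()

# SUBROUTINE: get_entry(conn, d)
# - Return the body for that day, or None if there is no entry.
def get_entry(conn, d):
    row = conn.execute(
        "SELECT body FROM entries WHERE day = ?", (format_day(d),)
    ).fetchone()
    return row[0] if row else None
```

Because each subroutine is small and named in a comment, it is easy to ask the AI to redo just one of them when something is off, rather than regenerating the whole file.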

In the PRD I find it’s helpful to ask for comments and then be very specific about the level of detail you want to see. If it’s too broad you risk getting code that is beyond your understanding – yes, you could ask the AI to explain it but if you hit a bug you’re going to be in for a world of pain.

If you find the code that the AI outputs is too complex, refine your prompt to make it simpler. Always make sure you understand the code, can debug it and that it’s doing what you want without errors. Ask the AI for tests.
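As a rough idea of what “ask the AI for tests” can give you, here is a small sketch. The `slugify` helper and its three tests are hypothetical (they are not from any of the day-by-day programs); the point is only that the tests are short enough to read and check yourself, which is what makes them useful when a bug shows up later.

```python
import re

def slugify(title):
    """Turn a post title into a lowercase, hyphen-separated URL slug."""
    # Replace every run of characters other than a-z and 0-9 with one hyphen.
    slug = re.sub(r"[^a-z0-9]+", "-", title.lower())
    # Drop any hyphen left over at the start or end.
    return slug.strip("-")

# Plain-assert tests: runnable with pytest, or by calling each function directly.
def test_basic_title():
    assert slugify("Hello World") == "hello-world"

def test_punctuation_and_spacing():
    assert slugify("  Demos are easy, solid programs are hard!  ") == (
        "demos-are-easy-solid-programs-are-hard"
    )

def test_empty_title():
    assert slugify("") == ""
```

If a test like these fails after the AI edits the function, you know which behavior changed without having to re-read all of the generated code.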

Demos are easy - solid programs are hard

Writing a prompt to get AI to make a simple demo is trivial. Getting AI to write robust code that has tests, is easy to understand, is well designed and modularized to your liking is much harder.

Architect the PRD

When you are making the PRD it’s helpful to have an overall architecture in mind and separate big goals from little ones. Doing one-shot prompting to get a large program is fine for a demo but not the way to build anything that you want to expand on in the future. It’s better to think about the overall structure and then make prompts/PRDs for each of the pieces of the program.

For example, if you’re creating a blog you’ll have the login area, the home page, the pages themselves, the design, the navigation, etc. Each of these is a “big piece” of the puzzle. Within each, you can then make little plans / mini-PRDs for what you’d like to see. Separating out these prompts and then prompting individually is helpful to manage cognitive load and make sure you’re tracking the code.

Tactically, for Big Plans I keep track in my separate doc: this is what I want to happen, here is how it should work, here are some suggestions for how to code it up, etc. For Little Plans, I can keep track in the doc or just prompt directly in the editor (e.g., I’ll add a comment to the code and then ask the AI to complete the code in situ).
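One way to picture the Big Plan / Little Plan split is as a small data structure, sketched below in Python. This is only an illustration under my own assumptions: the class names (`BigPiece`, `MiniPRD`), the `prompts_for` helper and the sample requirements are invented for the blog example, and a plain text doc does the same job. What it shows is the shape: each big piece owns a few mini-PRDs, and each mini-PRD becomes its own separate prompt.

```python
from dataclasses import dataclass, field
from typing import List

@dataclass
class MiniPRD:
    """One 'little plan': a goal plus a short list of specific requirements."""
    goal: str
    requirements: List[str] = field(default_factory=list)

    def to_prompt(self) -> str:
        # Render the plan as numbered requirements, one per line.
        lines = [f"Goal: {self.goal}", "Requirements:"]
        lines += [f"{i}. {req}" for i, req in enumerate(self.requirements, 1)]
        return "\n".join(lines)

@dataclass
class BigPiece:
    """One 'big piece' of the program, e.g. the login area of a blog."""
    name: str
    plans: List[MiniPRD] = field(default_factory=list)

def prompts_for(piece: BigPiece) -> List[str]:
    # One prompt per mini-PRD, so each piece is prompted individually.
    return [f"Piece: {piece.name}\n{plan.to_prompt()}" for plan in piece.plans]

# Hypothetical plan for the blog example; the requirements are placeholders.
blog = [
    BigPiece("login area", [
        MiniPRD("Log a user in", [
            "Use a username and password form",
            "Show an error message on a failed login",
        ]),
    ]),
    BigPiece("navigation", [
        MiniPRD("Link the main pages", [
            "Show links to the home page and the login area",
        ]),
    ]),
]

for piece in blog:
    for prompt in prompts_for(piece):
        print(prompt)
        print()
```

Keeping the pieces separate like this is mostly about cognitive load: you only have to hold one mini-PRD and the code it produced in your head at a time.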

Value of creativity and judgment

Since so much comes down to the PRD itself, knowing what you want is of the utmost importance. This is similar to Rick Rubin’s whole riff on the importance of judgment and taste. Knowing what to ask and knowing where to push become key.

The UX of AI tools really, really matters

Replit has a bunch of AI affordances and it made me really appreciate how impactful AI-invoking UX is. It’s actually pretty annoying to have to copy code from another editor into the IDE. Being able to invoke AI directly in the code makes a huge difference.

Tactically in Replit this means that you can highlight some code and press Command-I to then prompt AI directly on the code. Additionally, in Replit when you invoke the AI in-line it gives you green/red +/- diffs that show you what the suggested edits are. This is infinitely better than moving back and forth between ChatGPT and the editor.

It’s also amazing to have in-context “AI Explanations” - just highlight the text and with low latency it can show what it all means. This is similar to the non-AI dictionary and Wikipedia lookups in Kindle, but the explanations are better because they’re in context. The AI explanations are verbose, though, so I think there’s a lot of room to improve this.

All of this said, AI coding UX is not yet good enough to manage the mental overhead of navigating large projects. AI still feels like an add-on. It’s early days here so having a separate doc to help manage your process is still important.

Knowing how to code will only grow in value

AI is still not at the point where you can “not know how to code” - as soon as you hit a bug in your code the AI stops being as helpful (especially for complex code across files). Just “adding in code” gets you into deep water pretty fast if you really want to use the code later on. But if you put it in little by little, understand what it does, and comment and test it more, then you can do a lot more.

All that said, it pays to know how to code - the kid-that-coded-a-harry-potter-chatbot video notwithstanding. Demos are easy. Stable software is hard. Models will become better but knowing how to code has never been more valuable.

Not knowing “what there is to know” is one of the main hurdles

The more you know about end-to-end programming lifecycles, the more you can imagine what types of things to ask AI to do for you and the more you can process the results. However, if you don’t even know what to ask, you stall. There’s value in knowing the territory. For example, if you’re coding a video game it’s helpful to know the main areas you would need to implement and what types of things you should ask for. AI is pretty helpful in teaching this to you, but that’s a separate process. Broad knowledge of end-to-end processes and common mistakes is super valuable.

Knowing how to type quickly, having high computer literacy, reading quickly and knowing how to use the internet for learning will be essential: all of these are key skills that unlock using AI. It makes me wonder how to do a better job teaching this to my kids…

All of these points are in the context of code but many apply to non-code AI projects as well. The Architect / PRD points in particular I am finding super helpful for large non-code projects. “Just staying in Claude/GPT” is limiting for big projects since project management quickly becomes infeasible within the constraints of a chatbot UX.

That’s it. Now I’m off to write some PRDs for my AI :)